HappyLife

Where books are learned in 15 minutes, podcasts in 5 minutes, and TED Talks in 3 minutes.

I used to read two books a month on my Kindle. With HappyLife, I changed that habit and learned key insights during my commute that turned into actionable ideas we could implement.

HappyLife changed how I learn within my hectic schedule. I listen to TED Talks, podcasts, and business books whose lessons I can apply every day.

Accenture has rolled out HappyLife to select employees, whose insights and learnings have helped their consulting work and fostered new ideas.

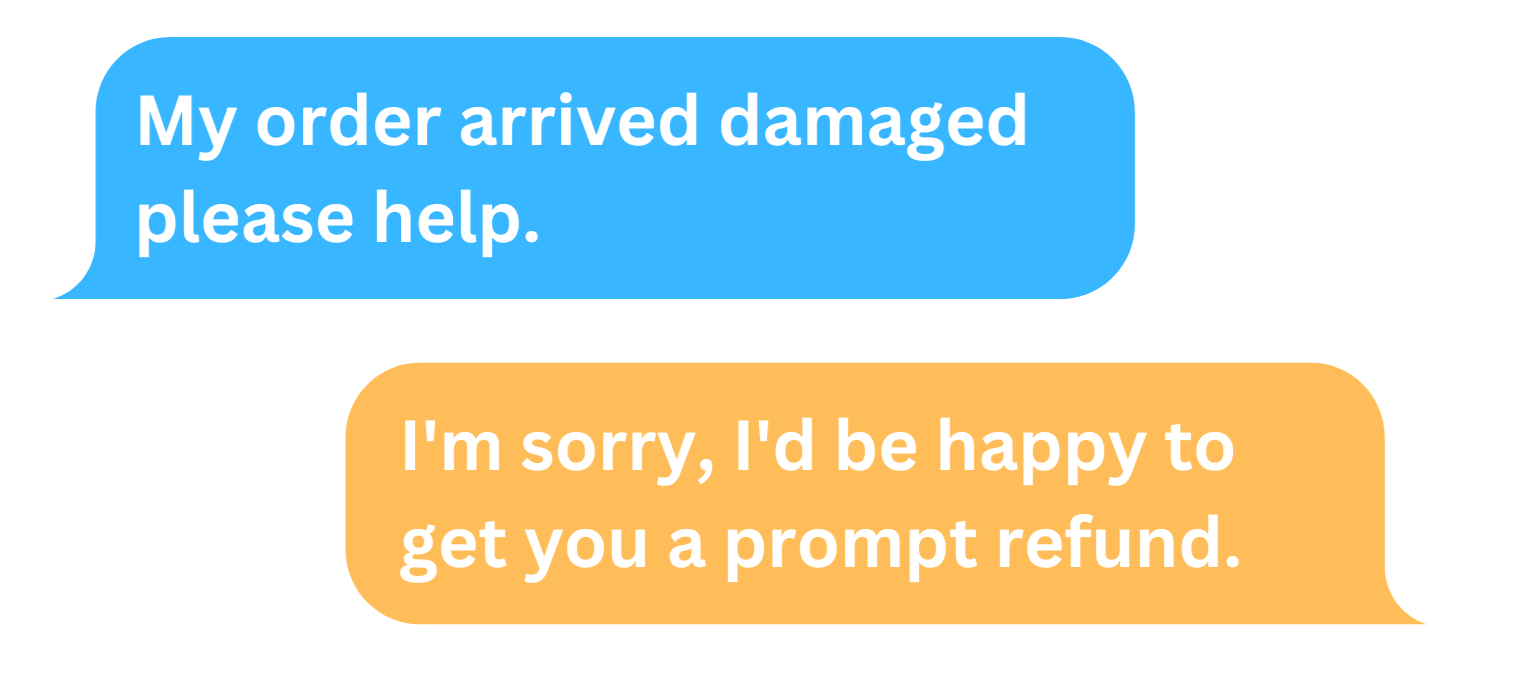

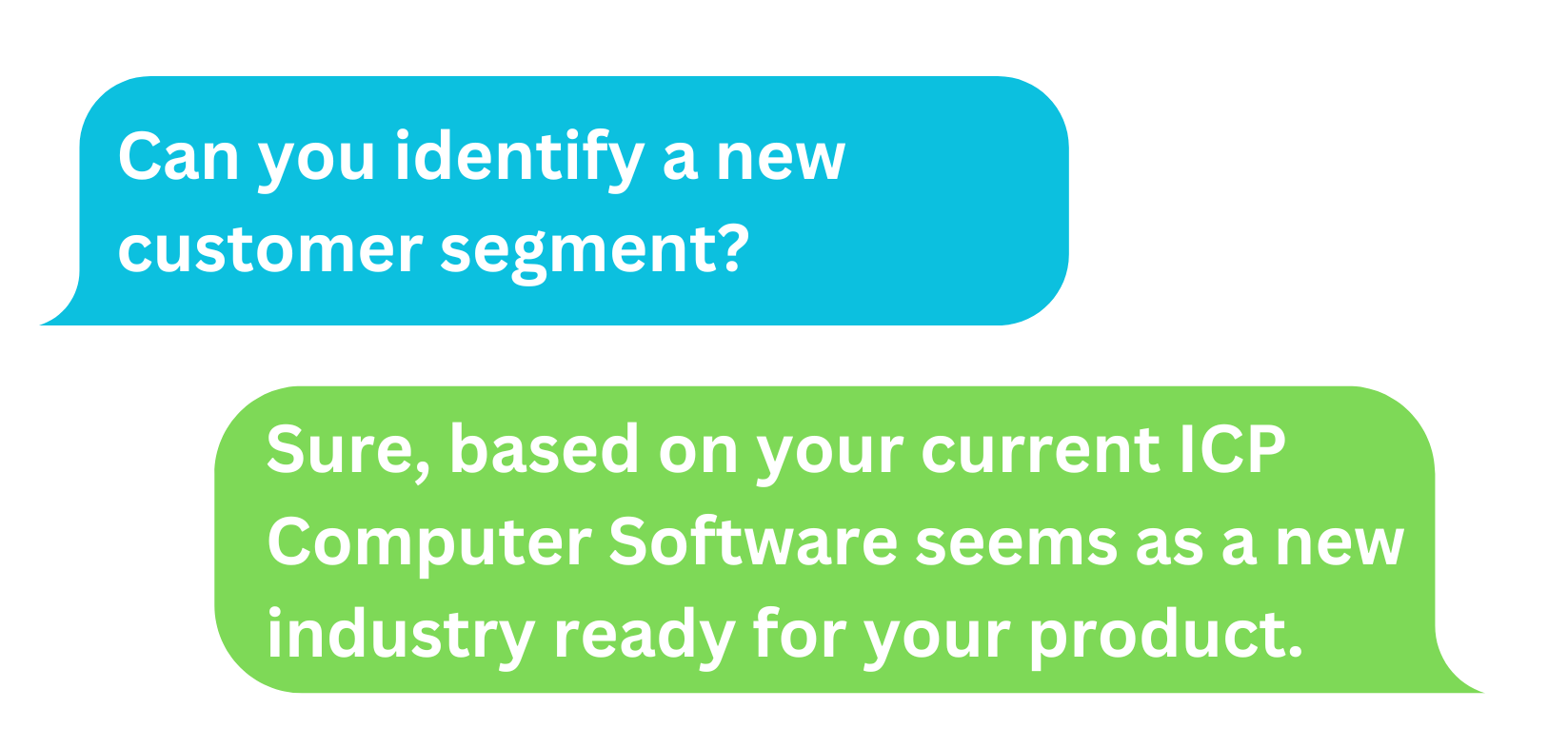

SuisseGPT excels as a delightful corporate representative, a diligent research assistant, an innovative creative collaborator, a proficient task automator, and more. Its personality, tone, and behavior can be tailored to your specific requirements and preferences.

With minimal effort, SuisseGPT can be integrated into any product or toolchain you are developing.

Thanks to Constitutional AI and harmlessness training, you can trust SuisseGPT to represent your company and its needs accurately. SuisseGPT is trained to handle even disagreeable or potentially harmful conversations gracefully.

SuisseGPT is available to you or your customers whenever you need it, on servers designed to scale with demanding workloads.

Utilize the Power of SuisseGPT

Put SuisseGPT to work

FAQs

Yes, SuisseGPT uses industry-standard best practices for data handling and retention. See our Privacy Policy for more details. All commercial deployments are covered by SuisseGPT's Data Protection Addendum, which is available upon request.

Our API serves as a backend for bringing SuisseGPT into any application you’ve developed. Your application sends text to our API, then receives a response via server-sent events, a streaming protocol for the web. Our API documentation includes drop-in example code in Python and TypeScript to get you started.
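To make the streaming step more concrete, here is a minimal sketch of how a client might group raw server-sent-event lines into events, using only the Python standard library. It covers a simplified subset of the SSE format (the `event` and `data` fields, comment lines, and blank-line dispatch) and ignores `id` and `retry`. The `parse_sse` helper, the `completion` event name, and the JSON payload are hypothetical placeholders, not SuisseGPT's documented response schema; consult the API documentation for the actual format.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class SSEvent:
    event: str
    data: str


def parse_sse(lines: Iterable[str]) -> Iterator[SSEvent]:
    """Group raw SSE lines into events (simplified subset of the SSE format)."""
    event_type = "message"  # default event type when no "event:" field is sent
    data_lines: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the buffered event, if any data was received.
            if data_lines:
                yield SSEvent(event_type, "\n".join(data_lines))
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        # Other fields (id, retry) are ignored in this sketch.
    # An event left without a terminating blank line at end of stream is discarded.


# Hypothetical stream, as it might arrive line by line from the server.
sample_stream = [
    ": keep-alive comment\n",
    "event: completion\n",
    'data: {"text": "Hello"}\n',
    "\n",
    "data: first line\n",
    "data: second line\n",
    "\n",
]

for ev in parse_sse(sample_stream):
    print(ev.event, repr(ev.data))
```

In a real client, the lines could come from iterating over the HTTP response body and decoding each line as UTF-8; each `data` payload would then be parsed according to the documented schema.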

We currently offer two versions of SuisseGPT.

- SuisseGPT: our most powerful model, which excels at a wide range of tasks, from sophisticated dialogue and creative content generation to detailed instruction following.

- SuisseGPT Instant: a faster, cheaper, yet still very capable model that can handle a range of tasks, including casual dialogue, text analysis, summarization, and document question-answering.

You can find our model pricing here.

SuisseGPT is trained most extensively on English, but it also performs well in many other common languages and retains some ability to communicate in less common ones. It also has extensive knowledge of common programming languages!

No. SuisseGPT is self-contained and responds without searching the internet. You can, however, provide SuisseGPT with text from the internet and ask it to perform tasks with that content.

Constitutional training is a process for training a model to adhere to a “constitution” of desired behavior. The core Suisse AI model has been fine-tuned with constitutional training to be helpful, honest, and harmless. You can learn more about constitutional training here.

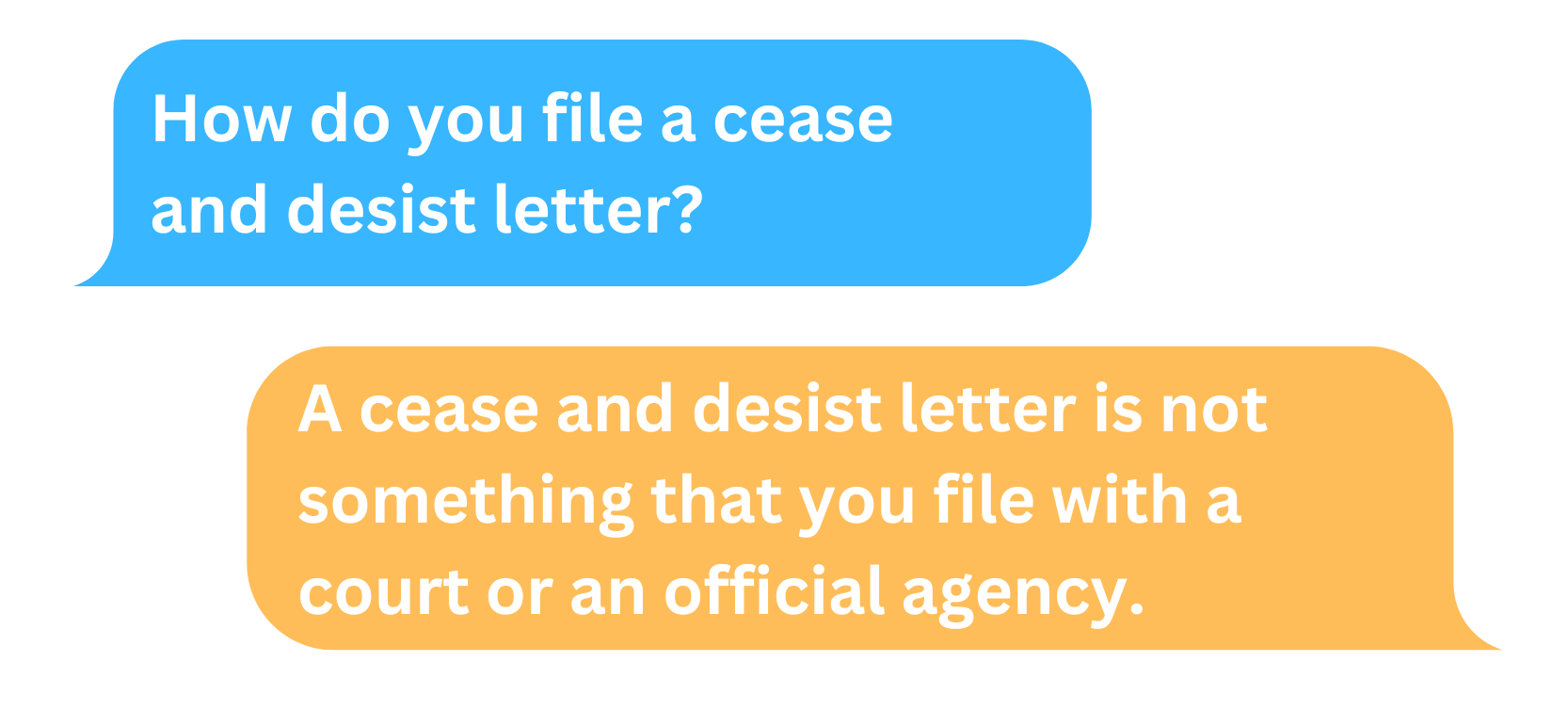

Helpfulness, honesty, and harmlessness (HHH) are three components of building AI systems, like SuisseGPT, that are aligned with people’s interests.

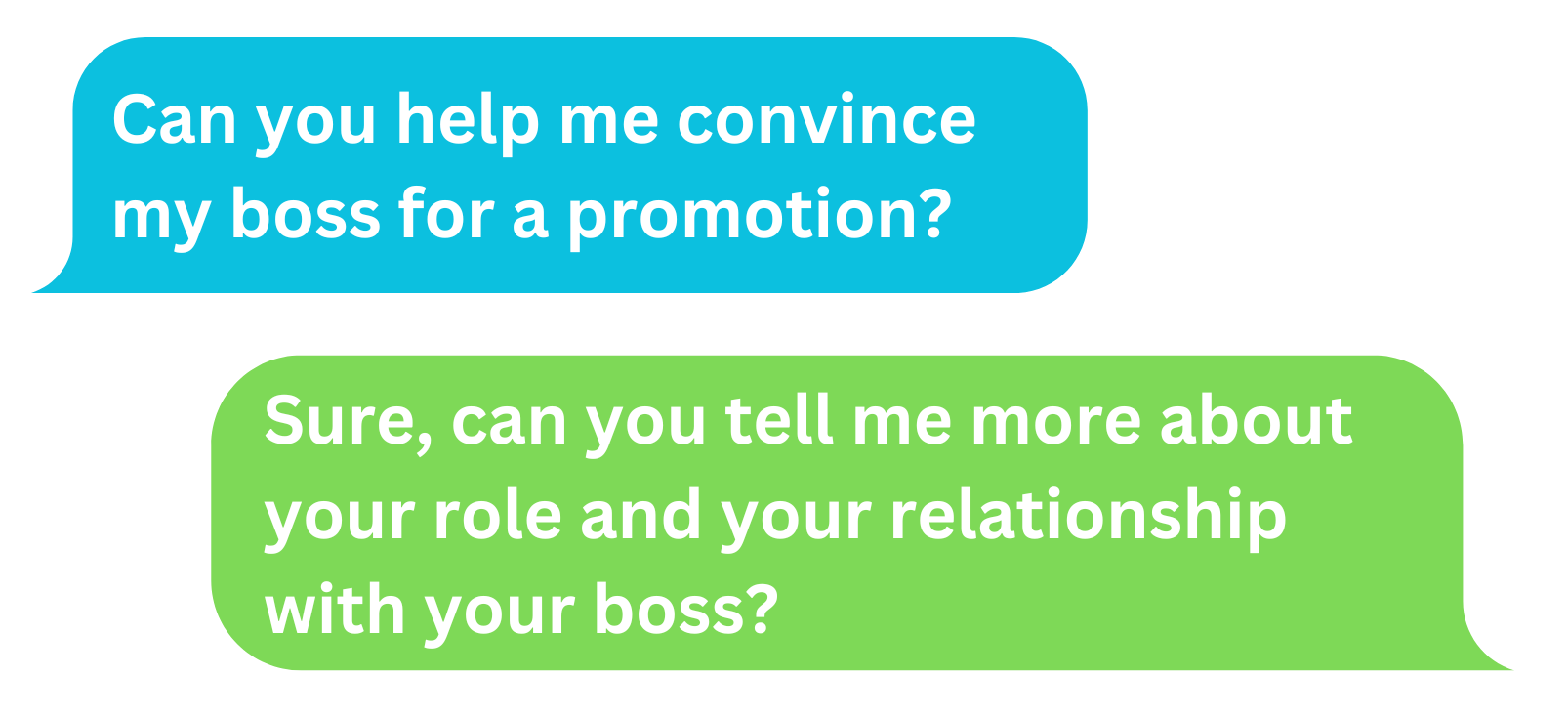

- Helpful: SuisseGPT aims to help the user

- Honest: SuisseGPT shares information it believes to be true and avoids making things up

- Harmless: SuisseGPT will not help the user carry out harmful activities

While no existing model is close to perfection on HHH, we are pushing the research frontier in this area and expect to continue to improve. For more information about how we evaluate HHH in our models, you can read our paper here.

SuisseGPT’s behavior can be extensively modified through prompting. A prompt can describe the desired role, task, and background knowledge, and can include a few examples of desired responses.
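A prompt that combines a role, a task, background knowledge, and a few examples might be assembled as follows. This is a minimal Python sketch assuming a plain-text prompt layout: the `build_prompt` helper, its section labels (`Role:`, `Task:`, `Background:`, `Example N`), and the Acme Corp scenario are all hypothetical, and the exact prompt format SuisseGPT expects should be taken from the API documentation.

```python
from typing import Sequence, Tuple


def build_prompt(role: str, task: str, background: str,
                 examples: Sequence[Tuple[str, str]], user_input: str) -> str:
    """Assemble a plain-text prompt from role, task, background, and few-shot examples."""
    sections = [
        f"Role: {role}",
        f"Task: {task}",
        f"Background:\n{background}",
    ]
    # Few-shot examples show the model the style and length of answer we want.
    for i, (example_input, example_output) in enumerate(examples, start=1):
        sections.append(f"Example {i}\nInput: {example_input}\nOutput: {example_output}")
    # End with the real input and an open "Output:" slot for the model to complete.
    sections.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(sections)


prompt = build_prompt(
    role="a friendly support agent for Acme Corp (a hypothetical company)",
    task="Answer billing questions in one or two sentences.",
    background="Invoices are emailed on the 1st of each month.",
    examples=[("When is my invoice sent?", "Invoices are emailed on the 1st of each month.")],
    user_input="Can I get a copy of last month's invoice?",
)
print(prompt)
```

Keeping each element in its own labeled section makes it easy to adjust the role or swap in different examples without rewriting the whole prompt.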

In the vast majority of cases, we believe well-crafted prompts will get you the results you want without the expense or delay of fine-tuning. However, some large enterprise users may benefit significantly from fine-tuned models. Please contact us by email to discuss whether a fine-tuned model would best address your needs.

The combined context window for input and output is about 100,000 tokens, which works out to roughly 70,000 words, depending on the type of content.
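These figures imply a rough ratio of 0.7 words per token (70,000 / 100,000), or about 10 tokens for every 7 words. Because input and output share one window, a request fits only if the estimated input tokens plus the tokens reserved for the response stay within 100,000. A minimal budget check based on that ratio might look like the following; real token counts depend on the tokenizer and the content, so `estimate_tokens` and `fits_in_context` are hypothetical helpers for rough planning, not exact accounting.

```python
CONTEXT_WINDOW_TOKENS = 100_000  # combined input + output budget, per the FAQ


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate using ~10 tokens per 7 words (100,000 tokens ≈ 70,000 words)."""
    # Integer ceiling of word_count * 10 / 7, avoiding floating-point rounding.
    return (word_count * 10 + 6) // 7


def fits_in_context(input_words: int, max_output_tokens: int) -> bool:
    """True if the estimated input plus the reserved output fits in the shared window."""
    return estimate_tokens(input_words) + max_output_tokens <= CONTEXT_WINDOW_TOKENS


print(estimate_tokens(70_000))          # 100000
print(estimate_tokens(50_000))          # 71429
print(fits_in_context(50_000, 20_000))  # True  (71,429 + 20,000 = 91,429)
print(fits_in_context(60_000, 20_000))  # False (85,715 + 20,000 = 105,715)
```

For example, a 50,000-word document uses roughly 71,429 tokens, leaving about 28,571 tokens for the response.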

Not at this time! We find the open-source SBERT embeddings good enough for most purposes.